September 26, 2017

[lliveblog][PAIR] Antonio Torralba on machine vision, human vision

At the PAIR Symposium, Antonio Torralba asks why image identification has traditionally gone so wrong.

|

NOTE: Live-blogging. Getting things wrong. Missing points. Omitting key information. Introducing artificial choppiness. Over-emphasizing small matters. Paraphrasing badly. Not running a spellpchecker. Mangling other people’s ideas and words. You are warned, people. |

If we train our data on Google Images of bedrooms, we’re training on idealized photos, not real world. It’s a biased set. Likewise for mugs, where the handles in images are almost all on the right side, not the left.

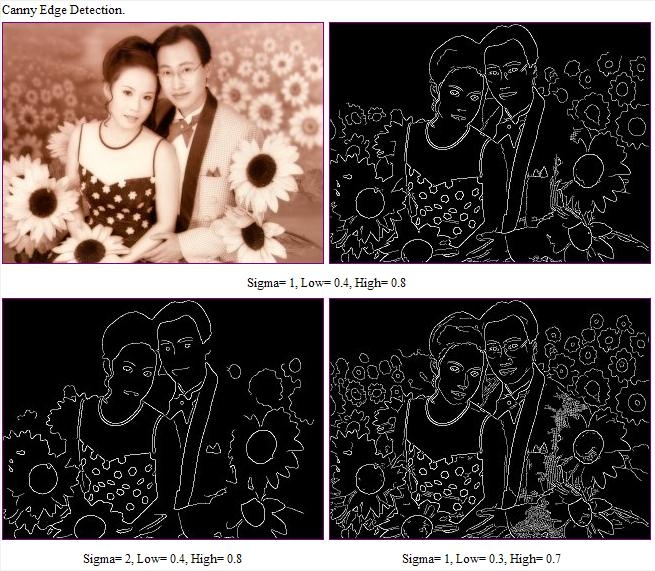

Another issue: The CANNY edge detector (for example) detects edges and throws a black and white reduction to the next level. “All the information is gone!” he says, showing that a messy set of white lines on black is in fact an image of a palace. [Maybe the White House?] (A different example of edge detection:)

/div>

/div>

Deep neural networks work well, and can be trained to recognize places in images, e.g., beach. hotel room, street. You train your neural net and it becomes a black box. E.g., how can it recognize that a bedroom is in fact a hotel room? Maybe it’s the lamp? But you trained it to recognize places, not objects. It works but we don’t know how.

When training a system on place detection, we found some units in some layers were in fact doing object detection. It was finding the lamps. Another unit was detecting cars, another detected roads. This lets us interpret the neural networks’ work. In this case, you could put names to more than half of the units.

How to quantify this? How is the representation being built? For this: Network dissection. This shows that when training a network on places, objects emerges. “The network may be doing something more interesting than your task.”The network may be doing something more interesting than your task: object detection is harder than place detection.

We currently train systems by gathering labeled data. But small children learn without labels. Children are self-supervised systems. So, take in the rgb values of frames of a movie, and have the system predict the sounds. When you train a system this way, it kind of works. If you want to predict the ambient sounds of a scene, you have to be able to recognize the objects, e.g., the sound of a car. To solve this, the network has to do object detection. That’s what they found when they looked into the system. It was doing face detection without having been trained to do that. It also detects baby faces, which make a different type of sound. It detects waves. All through self-supervision.

Other examples: On the basis of one segment, predict the next in the sequence. Colorize images. Fill in an empty part of an image. These systems work, and do so by detecting objects without having been trained to do so.

Conclusions: 1. Neural networks build represntations that are sometimes interpretatble. 2. The rep might solve a task that’s evem ore interesting than the primary task. 3. Understanding how these reps are built might allow new approaches for unsupervised or self-supervised training.

[liveblog][PAIR] Maya Gupta on controlling machine learning

At the PAIR symposium. Maya Gupta runs Glass Box at Google, which looks at black box issues. She is talking about how we can control machine learning to do what we want

|

NOTE: Live-blogging. Getting things wrong. Missing points. Omitting key information. Introducing artificial choppiness. Over-emphasizing small matters. Paraphrasing badly. Not running a spellpchecker. Mangling other people’s ideas and words. You are warned, people. |

The core idea of machine learning are its role models, i.e., its training data. That’s the best way to control machine learning. She’s going to address by looking at the goals of controlling machine learning.

A simple example of monotinicity. Let’s say we’re tring to recommend nearby coffee shops. So we use data about the happiness of customers and distance from the shop. We can fit the model ot a linear model. Or we can fit it to a curve, which works better for nearby shops but goes wrong for distant shops. That’s fine for Tokyo but terrible for Montana because it’ll be sending people many miles away. A montonic example says we don’t want to do that. This controls ML to make it more useful. Conclusion: the best ML has the right examples and the right kinds of flexibility. [Hard to blog this without her graphics. Sorry.] See “Deep Lattice Networks for Learning Partial Monotonic Models,” NIPS 2017; it will soon by on the TensorFlow site.

“The best way to do things for practitioners is to work next to them”The best way to do things for practitioners is to work next to them.

A fairness goal: e.g., we want to make sure that accuracy in India is the same as accuracy in the US. So, add a constraint that says what accuracy levels we want. Math lets us do that.

Another fairness goal: the rate of positive classifications should be the same in India as in the US, e.g., rate of students being accepted to a college. In one example, there is an accuracy trade-off in order to get fairness. Her attitude: Just tell us what you want and we’ll do it

Fairness isn’t always relative. E.g., E.g., minimize classification errors differently for different regions. You can’t always get what you want, but you sometimes can or can get close. [paraphrase!] See fatml.org

It can be hard to state what we want, but we can look at examples. E.g., someone hand-labels 100 examples. That’s not enough as training date, but we can train the system so that it classifies those 100 at something like 95% accuracy.

Sometimes you want to improve an existing ML system. You don’t want to retrain because you like the old results. So, you can add in a constraint such as keep the differences from the original classifications to less than 2%.

You can put all of the above together. See “Satisfying Real-World Goals with Dataset Constraints,” NIPS, 2016. Look for tools coming to TensorFlow.

Some caveats about this approach.

First, to get results that are the same for men and women, the data needs to come with labels. But sometimes there are privacy issues about that. “Can we make these fairness goals work without labels? ”Can we make these fairness goals work without labels? Research so far says the answer is messy. E.g., if we make ML more fair for gender (because you have gender labels), it may also make it fairer for race.

Second, this approach relies on categories, but individuals don’t always fit into categories. But, usually if you get things right on categories, it usually works out well in the blended examples.

Maya is an optimist about ML. “But we need more work on the steering wheel.” We’re not always sure we want to go with this technology. And we need more human-usable controls.

[liveblog][PAIR] Karrie Karahalios

At the Google PAIR conference, Karrie Karahalios is going to talk about how people make sense of their world and lives online. (This is an information-rich talk, and Karrie talks quickly, so this post is extra special unreliable. Sorry. But she’s great. Google her work.)

|

NOTE: Live-blogging. Getting things wrong. Missing points. Omitting key information. Introducing artificial choppiness. Over-emphasizing small matters. Paraphrasing badly. Not running a spellpchecker. Mangling other people’s ideas and words. You are warned, people. |

Today, she says, people want to understand how the information they see comes to them. Why does it vary? “Why do you get different answers depending on your wifi network? ”Why do you get different answers depending on your wifi network? These algorithms also affect our personal feeds, e.g., Instagram and Twitter; Twitter articulates it, but doesn’t tell you how it decides what you will see

In 2012, Christian Sandvig and [missed first name] Holbrook were wondering why they were getting odd personalized ads in their feeds. Most people were unaware that their feeds are curated: only 38% were aware of this in 2012. Thsoe who were aware became aware through “folk theories”: non-authoritative explanations that let them make sense of their feed. Four theories:

1. Personal engagement theory: If you like and click on someone, the more of that person you’ll see in your feed. Some people were liking their friends’ baby photos, but got tired of it.

2. Global population theory: If lots of people like, it will show up on more people’s feeds.

3. Narcissist: You’ll see more from people who are like you.

4. Format theory: Some types of things get shared more, e.g., photos or movies. But people didn’t get

Kempton studied thermostats in the 1980s. People either thought of it as a switch or feedback, or as a valve. He looked at their usage patterns. Regardless of which theory, they made it work for them.

She shows an Orbitz page that spits out flights. You see nothing under the hood. But someone found out that if you use a Mac, your prices were higher. People started using designs that shows the seams. So, Karrie’s group created a view that showed the feed and all the content from their network, which was three times bigger than what they saw. For many, this was like awakening from the Matrix. More important, they realized that their friends weren’t “liking” or commenting because the algorithm had kept their friends from seeing what they posted.

Another tool shows who you are seeing posts from and who you are not. This was upsetting for many people.

After going through this process people came up with new folk theories. E.g., they thought it must be FB’s wisdom in stripping out material that’s uninteresting one way or another. [paraphrasing].

They let them configure who they saw, which led many people to say that FB’s algorithm is actually pretty good; there was little to change.

Are these folk theories useful? Only two: personal engagement and control panel, because these let you do something. But there are poor tweaking tools.

How to embrace folk theories: 1. Algorithm probes, to poke and prod. “It would be great, Karrie says, to have open APIs so people could create tools”(It would be great to have open APIs so people could create tools. FB deprecated it.) 2. Seamful interfaces to geneate actionable folk theories. Tuning to revert of borrow?

Another control panel UI, built by Eric Gilbert, uses design to expose the algorithms.

She ends with a wuote form Richard Dyer: “All technolgoies are at once technical and also always social…”

[liveblog][PAIR] Jess Holbrook

I’m at the PAIR conference at Google. Jess Holbrook is UX lead for AI. He’s talking about human-centered machine learning.

|

NOTE: Live-blogging. Getting things wrong. Missing points. Omitting key information. Introducing artificial choppiness. Over-emphasizing small matters. Paraphrasing badly. Not running a spellpchecker. Mangling other people’s ideas and words. You are warned, people. |

“We want to put AI into the maker toolkit, to help you solve real problems.” One of the goals of this: “How do we democratize AI and change what it means to be an expert in this space?” He refers to a blog post he did with Josh Lovejoy about human-centered ML. He emphasizes that we are right at the beginning of figuring this stuff out.

Today, someone finds a data set, and finds a problem that that set could solve. You train a model and look at its performance, and decided if it’s good enough. And then you launch “The world’s first smart X. Next step: profit.” But what if you could do this in a human-centered way?

Human-centered design means: 1. Staying proximate. Know your users. 2. Inclusive divergence: reach out and bring in the right people. 3. Shared definition of success: what does it mean to be done? 4. Make early and often: lots of prototyping. 5. Iterate, test, throw it away.

So, what would a human-centered approach to ML look like? He gives some examples.

Instead of trying to find an application for data, human-centered ML finds a problem and then finds a data set appropriate for that problem. E.g., diagnosis plant diseases. Assemble tagged photos of plants. Or, use ML to personalize a “balancing spoon” for people with Parkinsons.

Today, we find bias in data sets after a problem is discoered. E.g., ProPublica’s article exposing the bias in ML recidivism predictions. Instead, proactively inspect for bias, as per JG’s prior talk.

Today, models personalize experiences, e.g., keyboards that adapt to you. With human-centered ML, people can personalize their models. E.g., someone here created a raccoon detector that uses images he himself took and uploaded, personalized to his particular pet raccoon.

Today, we have to centralize data to get results. “With human-centered ML we’d also have decentralized, federated learning”With human-centered ML we’d also have decentralized, federated learning, getting the benefits while maintaining privacy.

Today there’s a small group of ML experts. [The photo he shows are all white men, pointedly.] With human-centered ML, you get experts who have non-ML domain expertise, which leads to more makers. You can create more diverse, inclusive data sets.

Today, we have narrow training and testing. With human-centered ML, we’ll judge instead by how systems change people’s lives. E.g., ML for the blind to help them recognize things in their environment. Or real-time translation of signs.

Today, we do ML once. E.g., PicDescBot tweets out amusing misfires of image recognition. With human-centered ML we’ll combine ML and teaching. E.g., a human draws an example, and the neural net generates alternatives. In another example, ML improved on landscapes taken by StreetView, where it learned what is an improvement from a data set of professional photos. Google auto-suggest ML also learns from human input. He also shows a video of Simone Giertz, “Queen of the Shitty Robots.”

He references Amanda Case: “Expanding people’s definion of normal” is almost always a gradual process.

[The photo of his team is awesomely diverse.]

[liveblog] Google AI Conference

I am, surprisingly, at the first PAIR (People + AI Research) conference at Google, in Cambridge. There are about 100 people here, maybe half from Google. The official topic is: “How do humans and AI work together? How can AI benefit everyone?” I’ve already had three eye-opening conversations and the conference hasn’t even begun yet. (The conference seems admirably gender-balanced in audience and speakers.)

|

NOTE: Live-blogging. Getting things wrong. Missing points. Omitting key information. Introducing artificial choppiness. Over-emphasizing small matters. Paraphrasing badly. Not running a spellpchecker. Mangling other people’s ideas and words. You are warned, people. |

The great Martin Wattenberg (half of Wattenberg – Fernanda Viéga) kicks it off, introducing John Giannandrea, a VP at Google in charge of AI, search, and more. “We’ve been putting a lot of effort into using inclusive data sets.”

John says that every vertical will affected by this. “It’s important to get the humanistic side of this right.” He says there are 1,300 languages spoken world wide, so if you want to reach everyone with tech, machine learning can help. Likewise with health care, e.g. diagnosing retinal problems caused by diabetes. Likewise with social media.

PAIR intends to use engineering and analysis to augment expert intelligence, i.e., professionals in their jobs, creative people, etc. And “how do we remain inclusive? How do we make sure this tech is available to everyone and isn’t used just by an elite?”

He’s going to talk about interpretability, controllability, and accessibility.

Interpretability. Google has replaced all of its language translation software with neural network-based AI. He shows an example of Hemingway translated into Japanese and then back into English. It’s excellent but still partially wrong. A visualization tool shows a cluster of three strings in three languages, showing that the system has clustered them together because they are translations of the same sentence. [I hope I’m getting this right.] Another example: a photo of integrated gradients hows that the system has identified a photo as a fire boat because of the streams of water coming from it. “We’re just getting started on this.” “We need to invest in tools to understand the models.”

Controllability. These systems learn from labeled data provided by humans. “We’ve been putting a lot of effort into using inclusive data sets.” He shows a tool that lets you visuallly inspect the data to see the facets present in them. He shows another example of identifying differences to build more robust models. “We had people worldwide draw sketches. E.g., draw a sketch of a chair.” In different cultures people draw different stick-figures of a chair. [See Eleanor Rosch on prototypes.] And you can build constraints into models, e.g., male and female. [I didn’t get this.]

Accessibility. Internal research from Youtube built a model for recommending videos. Initially it just looked at how many users watched it. You get better results if you look not just at the clicks but the lifetime usage by users. [Again, I didn’t get that accurately.]

Google open-sourced Tensor Flow, Google’s AI tool. “People have been using it from everything to to sort cucumbers, or to track the husbandry of cows.”People have been using it from everything to to sort cucumbers, or to track the husbandry of cows. Google would never have thought of this applications.

AutoML: learning to learn. Can we figure out how to enable ML to learn automatically. In one case, it looks at models to see if it can create more efficient ones. Google’s AIY lets DIY-ers build AI in a cardboard box, using Raspberry Pi. John also points to an Android app that composes music. Also, Google has worked with Geena Davis to create sw that can identify male and female characters in movies and track how long each speaks. It discovered that movies that have a strong female lead or co-lead do better financially.

He ends by emphasizing Google’s commitment to open sourcing its tools and research.

Fernanda and Martin talk about the importance of visualization. (If you are not familiar with their work, you are leading deprived lives.) When F&M got interested in ML, they talked with engineers. ““ML is very different. Maybe not as different as software is from hardware. But maybe. ”ML is very different. Maybe not as different as software is from hardware. But maybe. We’re just finding out.”

M&F also talked with artists at Google. He shows photos of imaginary people by Mike Tyka created by ML.

This tells us that AI is also about optimizing subjective factors. ML for everyone: Engineers, experts, lay users.

Fernanda says ML spreads across all of Google, and even across Alphabet. What does PAIR do? It publishes. It’s interdisciplinary. It does education. E.g., TensorFlow Playground: a visualization of a simple neural net used as an intro to ML. They opened sourced it, and the Net has taken it up. Also, a journal called Distill.pub aimed at explaining ML and visualization.

She “shamelessly” plugs deeplearn.js, tools for bringing AI to the browser. “Can we turn ML development into a fluid experience, available to everyone?”

What experiences might this unleash, she asks.

They are giving out faculty grants. And expanding the Brain residency for people interested in HCI and design…even in Cambridge (!).

August 13, 2017

Machine learning cocktails

Inspired by fabulously wrong paint colors that Janelle Shane’s generated by running existing paint names through a machine learning system, and then by an hilarious experiment in dog breed names by my friend Matthew Battles, I decided to run some data through a beginner’s machine learning algorithm by karpathy.

I fed a list of cocktail names in as data to an unaltered copy of karpathy’s code. After several hundred thousand iterations, here’s a highly curated list of results:

- French Connerini Mot

- Freside

- Rumibiipl

- Freacher

- Agtaitane

- Black Silraian

- Brack Rickwitr

- Hang

- boonihat

- Tuxon

- Bachutta B

- My Faira

- Blamaker

- Salila and Tonic

- Tequila Sou

- Iriblon

- Saradise

- Ponch

- Deiver

- Plaltsica

- Bounchat

- Loner

- Hullow

- Keviy Corpse der

- KreckFlirch 75

- Favoyaloo

- Black Ruskey

- Avigorrer

- Anian

- Par’sHance

- Salise

- Tequila slondy

- Corpee Appant

- Coo Bogonhee

- Coakey Cacarvib

- Srizzd

- Black Rosih

- Cacalirr

- Falay Mund

- Frize

- Rabgel

- FomnFee After

- Pegur

- Missoadi Mangoy Rpey Cockty e

- Banilatco

- Zortenkare

- Riscaporoc

- Gin Choler Lady or Delilah

- Bobbianch 75

- Kir Roy Marnin Puter

- Freake

- Biaktee

- Coske Slommer Roy Dog

- Mo Kockey

- Sane

- Briney

- Bubpeinker

- Rustin Fington Lang T

- Kiand Tea

- Malmooo

- Batidmi m

- Pint Julep

- Funktterchem

- Gindy

- Mod Brandy

- Kkertina Blundy Coler Lady

- Blue Lago’sil

- Mnakesono Make

- gizzle

- Whimleez

- Brand Corp Mook

- Nixonkey

- Plirrini

- Oo Cog

- Bloee Pluse

- Kremlin Colone Pank

- Slirroyane Hook

- Lime Rim Swizzle

- Ropsinianere

- Blandy

- Flinge

- Daago

- Tuefdequila Slandy

- Stindy

- Fizzy Mpllveloos

- Bangelle Conkerish

- Bnoo Bule Carge Rockai Ma

- Biange Tupilang Volcano

- Fluffy Crica

- Frorc

- Orandy Sour

- The candy Dargr

- SrackCande

- The Kake

- Brandy Monkliver

- Jack Russian

- Prince of Walo Moskeras

- El Toro Loco Patyhoon

- Rob Womb

- Tom and Jurr Bumb

- She Whescakawmbo Woake

- Gidcapore Sling

- Mys-Tal Conkey

- Bocooman Irion anlis

- Ange Cocktaipopa

- Sex Roy

- Ruby Dunch

- Tergea Cacarino burp Komb

- Ringadot

- Manhatter

- Bloo Wommer

- Kremlin Lani Lady

- Negronee Lince

- Peady-Panky on the Beach

Then I added to the original list of cocktails a list of Western philosophers. After about 1.4 million iterations, here’s a curated list:

- Wotticolus

- Lobquidibet

- Mores of Cunge

- Ruck Velvet

- Moscow Muáred

- Elngexetas of Nissone

- Johkey Bull

- Zoo Haul

- Paredo-fleKrpol

- Whithetery Bacady Mallan

- Greekeizer

- Frellinki

- Made orass

- Wellis Cocota

- Giued Cackey-Glaxion

- Mary Slire

- Robon Moot

- Cock Vullon Dases

- Loscorins of Velayzer

- Adg Cock Volly

- Flamanglavere Manettani

- J.N. tust

- Groscho Rob

- Killiam of Orin

- Fenck Viele Jeapl

- Gin and Shittenteisg Bura

- buzdinkor de Mar

- J. Apinemberidera

- Nickey Bull

- Fishomiunr Slmester

- Chimio de Cuckble Golley

- Zoo b Revey Wiickes

- P.O. Hewllan o

- Hlack Rossey

- Coolle Wilerbus

- Paipirista Vico

- Sadebuss of Nissone

- Sexoo

- Parodabo Blazmeg

- Framidozshat

- Almiud Iquineme

- P.D. Sullarmus

- Baamble Nogrsan

- G.W.J. . Malley

- Aphith Cart

- C.G. Oudy Martine ram

- Flickani

- Postine Bland

- Purch

- Caul Potkey

- J.O. de la Matha

- Porel

- Flickhaitey Colle

- Bumbat

- Mimonxo

- Zozky Old the Sevila

- Marenide Momben Coust Bomb

- Barask’s Spacos Sasttin

- Th mlug

- Bloolllamand Royes

- Hackey Sair

- Nick Russonack

- Fipple buck

- G.W.F. Heer Lach Kemlse Male

Yes, we need not worry about human bartenders, cocktail designers, or philosophers being replaced by this particular algorithm. On the other hand, this is algorithm consists of a handful of lines of code and was applied blindly by a person dumber than it. Presumably SkyNet — or the next version of Microsoft Clippy — will be significantly more sophisticated than that.

May 15, 2017

[liveblog][AI] AI and education lightning talks

Sara Watson, a BKC affiliate and a technology critic, is moderating a discussion at the Berkman Klein/Media Lab AI Advance.

|

NOTE: Live-blogging. Getting things wrong. Missing points. Omitting key information. Introducing artificial choppiness. Over-emphasizing small matters. Paraphrasing badly. Not running a spellpchecker. Mangling other people’s ideas and words. You are warned, people. |

Karthik Dinakar at the Media Lab points out what we see in the night sky is in fact distorted by the way gravity bends light, which Einstein called a “gravity lens.” Same for AI: The distortion is often in the data itself. Karthik works on how to help researchers recognize that distortion. He gives an example of how to capture both cardiologist and patient lenses to better to diagnose women’s heart disease.

Chris Bavitz is the head of BKC’s Cyberlaw Clinic. To help Law students understand AI and tech, the Clinic encourages interdisciplinarity. They also help students think critically about the roles of the lawyer and the technologist. The clinic prefers early relationships among them, although thinking too hard about law early on can diminish innovation.

He points to two problems that represent two poles. First, IP and AI: running AI against protected data. Second, issues of fairness, rights, etc.

Leah Plunkett, is a professor at Univ. New Hampshire Law School and is a BKC affiliate. Her topic: How can we use AI to teach? She points out that if Tom Sawyer were real and alive today, he’d be arrested for what he does just in the first chapter. Yet we teach the book as a classic. We think we love a little mischief in our lives, but we apparently don’t like it in our kids. We kick them out of schools. E.g., of 49M students in public schools in 20-11, 3.45M were suspended, and 130,000 students were expelled. These disproportionately affect children from marginalized segments.

Get rid of the BS safety justification and the govt ought to be teaching all our children without exception. So, maybe have AI teach them?

Sarah: So, what can we do?

Chris: We’re thinking about how we can educate state attorneys general, for example.

Karthik: We are so far from getting users, experts, and machine learning folks together.

Leah: Some of it comes down to buy-in and translation across vocabularies and normative frameworks. It helps to build trust to make these translations better.

[I missed the QA from this point on.]

[liveblog] AI Advance opening: Jonathan Zittrain and lightning talks

I’m at a day-long conference/meet-up put on by the Berkman Klein Center‘s and MIT Media Lab‘s “AI for the Common Good” project.

|

NOTE: Live-blogging. Getting things wrong. Missing points. Omitting key information. Introducing artificial choppiness. Over-emphasizing small matters. Paraphrasing badly. Not running a spellpchecker. Mangling other people’s ideas and words. You are warned, people. |

Jonathan Zittrain gives an opening talk. Since we’re meeting at Harvard Law, JZ begins by recalling the origins of what has been called “cyber law,” which has roots here. Back then, the lawyers got to the topic first, and thought that they could just think their way to policy. We are now at another signal moment as we are in a frenzy of building new tech. This time we want instead to involve more groups and think this through. [I am wildly paraphrasing.]

JZ asks: What is it that we intuitively love about human judgment, and are we willing to insist on human judgments that are worse than what a machine would come up with? Suppose for utilitarian reasons we can cede autonomy to our machines — e.g., autonomous cars — shouldn’t we? And what do we do about maintaining local norms? E.g., “You are now entering Texas where your autonomous car will not brake for pedestrians.”

“Should I insist on being misjudged by a human judge because that’s somehow artesinal?” when, ex hypothesis, an AI system might be fairer.

Autonomous systems are not entirely new. They’re bringing to the fore questions that have always been with us. E.g., we grant a sense of discrete intelligence to corporations. E.g., “McDonald’s is upset and may want to sue someone.”

[This is a particularly bad representation of JZ’s talk. Not only is it wildly incomplete, but it misses the through-line and JZ’s wit. Sorry.]

Lightning Talks

Finale Doshi-Velez is particularly interested in interpretable machine learning (ML) models. E.g., suppose you have ten different classifiers that give equally predictive results. Should you provide the most understandable, all of them…?

Why is interpretability so “in vogue”? Suppose non-interpretable AI can do something better? In most cases we don’t know what “better” means. E.g., someone might want to control her glucose level, but perhaps also to control her weight, or other outcomes? Human physicians can still see things that are not coded into the model, and that will be the case for a long time. Also, we want systems that are fair. This means we want interpretable AI systems.

How do we formalize these notions of interpretability? How do we do so for science and beyond? E.g., what is a legal “right to explanation

” mean? She is working with Sam Greshman on how to more formally ground AI interpretability in the cognitive science of explanation.

Vikash Mansinghka leads the eight-person Probabilistic Computing project at MIT. They want to build computing systems that can be our partners, not our replacements. We have assumed that the measure of success of AI is that it beats us at our own game, e.g., AlphaGo, Deep Blue, Watson playing Jeopardy! But games have clearly measurable winners.

His lab is working on augmented intelligence that gives partial solutions, guidelines and hints that help us solve problems that neither system could solve on their own. The need for these systems are most obvious in large-scale human interest projects, e.g., epidemiology, economics, etc. E.g., should a successful nutrition program in SE Asia be tested in Africa too? There are many variables (including cost). BayesDB, developed by his lab, is “augmented intelligence for public interest data science.”

Traditional computer science, computing systems are built up from circuits to algorithms. Engineers can trade off performance for interpretability. Probabilisitic systems have some of the same considerations. [Sorry, I didn’t get that last point. My fault!]

John Palfrey is a former Exec. Dir. of BKC, chair of the Knight Foundation (a funder of this project) and many other things. Where can we, BKC and the Media Lab, be most effective as a research organization? First, we’ve had the most success when we merge theory and practice. And building things. And communicating. Second, we have not yet defined the research question sufficiently. “We’re close to something that clearly relates to AI, ethics and government” but we don’t yet have the well-defined research questions.

The Knight Foundation thinks this area is a big deal. AI could be a tool for the public good, but it also might not be. “We’re queasy” about it, as well as excited.

Nadya Peek is at the Media Lab and has been researching “macines that make machines.” She points to the first computer-controlled machine (“Teaching Power Tools to Run Themselves“) where the aim was precision. People controlled these CCMs: programmers, CAD/CAM folks, etc. That’s still the case but it looks different. Now the old jobs are being done by far fewer people. But the spaces between doesn’t always work so well. E.g., Apple can define an automatiable workflow for milling components, but if you’re student doing a one-off project, it can be very difficult to get all the integrations right. The student doesn’t much care about a repeatable workflow.

Who has access to an Apple-like infrastructure? How can we make precision-based one-offs easier to create? (She teaches a course at MIT called “How to create a machine that can create almost anything.”)

Nathan Mathias, MIT grad student with a newly-minted Ph.D. (congrats, Nathan!), and BKC community member, is facilitating the discussion. He asks how we conceptualize the range of questions that these talks have raised. And, what are the tools we need to create? What are the social processes behind that? How can we communicate what we want to machines and understand what they “think” they’re doing? Who can do what, where that raises questions about literacy, policy, and legal issues? Finally, how can we get to the questions we need to ask, how to answer them, and how to organize people, institutions, and automated systems? Scholarly inquiry, organizing people socially and politically, creating policies, etc.? How do we get there? How can we build AI systems that are “generative” in JZ’s sense: systems that we can all contribute to on relatively equal terms and share them with others.

Nathan: Vikash, what do you do when people disagree?

Vikash: When you include the sources, you can provide probabilistic responses.

Finale: When a system can’t provide a single answer, it ought to provide multiple answers. We need humans to give systems clear values. AI things are not moral, ethical things. That’s us.

Vikash: We’ve made great strides in systems that can deal with what may or may not be true, but not in terms of preference.

Nathan: An audience member wants to know what we have to do to prevent AI from repeating human bias.

Nadya: We need to include the people affected in the conversations about these systems. There are assumptions about the independence of values that just aren’t true.

Nathan: How can people not close to these systems be heard?

JP: Ethan Zuckerman, can you respond?

Ethan: One of my colleagues, Joy Buolamwini, is working on what she calls the Algorithmic Justice League, looking at computer vision algorithms that don’t work on people of color. In part this is because the tests use to train cv systems are 70% white male faces. So she’s generating new sets of facial data that we can retest on. Overall, it’d be good to use test data that represents the real world, and to make sure a representation of humanity is working on these systems. So here’s my question: We find co-design works well: bringing in the affected populations to talk with the system designers?

[Damn, I missed Yochai Benkler‘s comment.]

Finale: We should also enable people to interrogate AI when the results seem questionable or unfair. We need to be thinking about the proccesses for resolving such questions.

Nadya: It’s never “people” in general who are affected. It’s always particular people with agendas, from places and institutions, etc.

May 7, 2017

Predicting the tides based on purposefully false models

Newton showed that the tides are produced by the gravitational pull of the moon and the Sun. But, as a 1914 article in Scientific American pointed out, if you want any degree of accuracy, you have to deal with the fact that “the earth is not a perfect sphere, it isn’t covered with water to a uniform form depth, it has many continents and islands and sea passages of peculiar shapes and depths, the earth does not travel about the sun in a circular path, and earth, sun and moon are not always in line. The result is that two tides are rarely the same for the same place twice running, and that tides differ from each other enormously in both times and in amplitude.”

So, we instead built a machine of brass, steel and mahogany. And instead of trying to understand each of the variables, Lord Kelvin postulated “a very respectable number” of fictitious suns and moons in various positions over the earth, moving in unrealistically perfect circular orbits, to account for the known risings and fallings of the tide, averaging readings to remove unpredictable variations caused by weather and “freshets.” Knowing the outcomes, he would nudge a sun or moon’s position, or add a new sun or moon, in order to get the results to conform to what we know to be the actual tidal measurements. If adding sea serpents would have helped, presumably Lord Kelvin would have included them as well.

The first mechanical tide-predicting machines using these heuristics were made in England. In 1881, one was created in the United States that was used by the Coast and Geodetic Survey for twenty-seven years.

Then, in 1914, it was replaced by a 15,000-piece machine that took “account of thirty-seven factors or components of a tide” (I wish I knew what that means) and predicted the tide at any hour. It also printed out the information rather than requiring a human to transcribe it from dials. “Unlike the human brain, this one cannot make a mistake.”

This new model was more accurate, with greater temporal resolution. But it got that way by giving up on predicting the actual tide, which might vary because of the weather. We simply accept the unpredictability of what we shall for the moment call “reality.” That’s how we manage in a world governed by uniform laws operating on unpredictably complex systems.

It is also a model that uses the known major causes of average tides — the gravitational effects of the sun and moon — but that feels fine about fictionalizing the model until it provides realistic results. This makes the model incapable of being interrogated about the actual causes of the tide, although we can tinker with it to correct inaccuracies. In this there is a very rough analogy — and some disanalogies — with some instances of machine learning.

April 19, 2017

Alien knowledge

Medium has published my long post about how our idea of knowledge is being rewritten, as machine learning is proving itself to be more accurate than we can be, in some situations, but achieves that accuracy by “thinking” in ways that we can’t follow.

This is from the opening section:

We are increasingly relying on machines that derive conclusions from models that they themselves have created, models that are often beyond human comprehension, models that “think” about the world differently than we do.

But this comes with a price. This infusion of alien intelligence is bringing into question the assumptions embedded in our long Western tradition. We thought knowledge was about finding the order hidden in the chaos. We thought it was about simplifying the world. It looks like we were wrong. Knowing the world may require giving up on understanding it.