May 15, 2017

[liveblog][AI] Perspectives on community and AI

Chelsea Barabas is moderating a set of lightning talks at the AI Advance, at Berkman Klein and MIT Media Lab.

NOTE: Live-blogging. Getting things wrong. Missing points. Omitting key information. Introducing artificial choppiness. Over-emphasizing small matters. Paraphrasing badly. Not running a spellpchecker. Mangling other people’s ideas and words. You are warned, people.

Lionel Brossi recounts growing up in Argentina and the assumption that all boys care about football. He moved to Chile, which is split between people who do and do not watch football. “Humans are inherently biased.” So, our AI systems are likely to be biased. Cognitive science has shown that the participants in its studies tend to be WEIRD: western, educated, industrialized, rich and democratic. Also straight and white. He references Kate Crawford’s “AI’s White Guy Problem.” We need not only diverse teams of developers, but also to think about how data can be more representative. We also need to think about the users. One approach is to work on goal-centered design.

If we ever get to unbiased AI, Borges’ statement, “The original is unfaithful to the translation,” may apply.

Chelsea: What is an inclusive way to think of cross-border countries?

Lionel: We need to co-design with more people.

Madeline Elish is at Data and Society and an anthropology of technology grad student at Columbia. She’s met designers who thought it might be a good idea to make a phone run faster if you yell at it. But this would train children to yell at things. What’s the context in which such designers work? She and Tim Hwang set about to build bridges between academics and businesses. They asked what designers see as their responsibility for the social implications of their work. They found four core challenges:

1. Assuring users perceive good intentions

2. Protecting privacy

3. Long-term adoption

4. Accuracy and reliability

She and Tim wrote An AI Pattern Language [pdf] about the frameworks that guide design. She notes that none of them were thinking about social justice. The book argues that there’s a way to translate between the social justice framework and, for example, the accuracy framework.

Ethan Zuckerman: How much of the language you’re seeing feels familiar from other hype cycles?

Madeline: Tim and I looked at the history of autopilot litigation to see what might happen with autonomous cars. We should be looking at Big Data as the prior hype cycle.

Yarden Katz is at the BKC and at the Dept. of Systems Biology at Harvard Medical School. He talks about the history of AI, starting with a 1958 claim about a translation machine. 1966: Minsky. Then there was an AI funding winter, but now it’s big again. “Until recently, AI was a dirty word.”

Today we use it schizophrenically: for Deep Learning or in a totally diluted sense as something done by a computer. “AI” now seems to be a branding strategy used by Silicon Valley.

“AI’s history is diverse, messy, and philosophical.” If complexity is embraced, “AI” might not be a useful category for policy. So we should go back to the politics of technology:

1. Who controls the code/frameworks/data?

2. Is the system inspectable/open?

3. Who sets the metrics? Who benefits from them?

The media are not going to be the watchdogs because they’re caught up in the hype. So who will be?

Q: There’s a qualitative difference in the sort of tasks now being turned over to computers. We’re entrusting machines with tasks we used to only trust to humans with good judgment.

Yarden: We already do that with systems that are not labeled AI, like “risk assessment” programs used by insurance companies.

Madeline: Before AI got popular again, there were expert systems. We are reconfiguring our understanding, moving it from a cognition frame to a behavioral one.

Chelsea: I’ve been involved in co-design projects that have backfired. These projects have sometimes been somewhat extractive: going in, getting lots of data, etc. How do we do co-design projects that are not extractive but that also aren’t prohibitively expensive?

Nathan: To what degree does AI change the dimensions of questions about explanation, inspectability, etc.?

Yarden: The promoters of the Deep Learning narrative want us to believe you just need to feed in lots and lots of data. DL is less inspectable than other methods. DL is not learning from nothing. There are open questions about its inductive power.

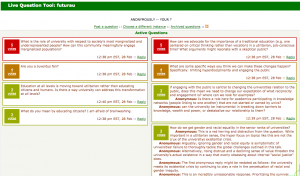

Amy Zhang and Ryan Budish give a pre-alpha demo of the AI Compass being built at BKC. It’s designed to help people find resources exploring topics related to the ethics and governance of AI.