June 10, 2012

Why is knowledge so funny?

…at least on the web it tends to be. That in any case is the contention of my latest column in KMworld.

June 9, 2012

More than a dozen universities are holding bake sales for NASA. The aim is to raise awareness, not money.

To me, NASA is a bit like a public library: No matter what, you want your town and your country to visibly declare their commitment to the value of human curiosity.

In other science news, attempts to replicate the faster-than-light neutrino results have confirmed that the spunky little buggers obey the universal traffic limit.

The system works! Even if you don’t screw in the optical cables tightly.

June 7, 2012

[Note that this is cross posted at the new Digital Scholarship at Harvard blog.]

Ralph Schroeder and Eric Meyer of the Oxford Internet Institute are giving a talk sponsored by the Harvard Library on Internet, Science, and Transformations of Knowledge.

NOTE: Live-blogging. Getting things wrong. Missing points. Omitting key information. Introducing artificial choppiness. Over-emphasizing small matters. Paraphrasing badly. Not running a spellpchecker. Mangling other people’s ideas and words. You are warned, people.

Ralph begins by defining e-research as “Research using digital tools and digital data for the distributed and collaborative production of knowledge.” He points to knowledge as the contentious term. “But we’re going to take a crack at why computational methods are such an important part of knowledge.” They’re going to start with theory and then move to cases.

Over the past couple of decades, we’ve moved from talking about supercomputing to the grid to Web 2.0 to clouds and now Big Data, Ralph says. There is continuity, however: it’s all e-research, and to have a theory of how e-research works, you need a few components: 1. Computational manipulability (mathematization) and 2. The social-technical forces that drive that.

Computational manipulability. This is important because mathematics enables consensus and thus collaboration. “High consensus, rapid discovery.”

Research technologies and driving forces. The key to driving knowledge is research technologies, he says. I.e., machines. You also need an organizational component.

Then you need to look at how that plays out in history, physics, astronomy, etc. Not all fields are organized in the same way.

Eric now talks, beginning with a quote from a scholar who says he now has more information than he needs, all without rooting around in libraries. But others complain that we are not asking new enough questions.

He begins with the Large Hadron Collider. It takes lots of people to build it and then to deal with the data it generates. Physics is usually cited as the epitome of e-research. It is the exemplar of how to do big collaboration, he says.

Distributed computation is a way of engaging citizens in science, he says. E.g. Galaxy Zoo, which engages citizens in classifying galaxies. Citizens there have also found new types of galaxies (“green peas”), etc. Another example: the Genetic Association Information Network is trying to find the cause of bipolarism. It has now grown into a worldwide collaboration. Another: Structure of Populations, Levels of Abundance, and Status of Humpbacks (SPLASH), a project that requires human brains to match humpback tails. By collaboratively working on data from 500 scientists around the Pacific Rim, patterns of migration have emerged, and it was possible to come up with a count of humpbacks (about 15-17K). We may even be able to find out how long humpbacks live. (It’s at least 120 years because a harpoon head was found in one from a company that went out of business that long ago.)

Ralph looks at e-research in Sweden as an example. They have a major initiative under way trying to combine health data with population data. The Swedes have been doing this for a long time. Each Swede has a unique ID; this requires the trust of the population. The social component that engenders this trust is worth exploring, he says. He points to cases where IP rights have had to be negotiated. He also points to the Pynchon Wiki where experts and the crowd annotate Pynchon’s works. Also, Google Books is a source of research data.

Eric: Has Google taken over scholarly research? 70% of scholars use Google and 66% use Google Scholar. But in the humanities, 59% go to the library. 95% consult peers and experts — they ask people they trust. It’s true in the physical sciences too, he says, although the numbers vary some.

Eric says the digital is still considered a bit dirty as a research tool. If you have too many URLs in your footnotes it looks like you didn’t do any real work, or so people fear.

Ralph: Is e-research old wine in new bottles? Underlying all the different sorts of knowledge is mathematization: a shared symbolic language with which you can do things. You have a physical core that consists of computers around which lots of different scholars can gather. That core has changed over time, but all offer types of computational manipulability. The Pynchon Wiki just needs a server. The LHC needs to be distributed globally across sites with huge computing power. The machines at the core are constantly being refined. Different fields use this power differently, and focus their efforts on using those differences to drive their fields forward. This is true in literature and language as well. These research technologies have become so important since they enable researchers to work across domains. They are like passports across fields.

A scholar who uses this tech may gain social traction. But you also get resistance: “What are these guys doing with computing and Shakespeare?”

What can we do with this knowledge about how knowledge is changing? 1. We can inform funding decisions: What’s been happening in different fields, how they are affected by social organizations, etc. 2. We need a multidisciplinary way of understanding e-research as a whole. We need more than case studies, Ralph says. We need to be aiming at developing a shared platform for understanding what’s going on. 3. Every time you use these techniques, you are either disintermediating data (e.g., Galaxy Zoo) or intermediating (biomedicine). 4. Given that it’s all digital, we as outsiders have tremendous opportunities to study it. We can analyze it. Which fields are moving where? Where are projects being funded and how are they being organized? You can map science better than ever. One project took a large chunk of academic journals and looked in real time at who is reading what, in what domain.

This lets us understand knowledge better, so we can work together better across departments and around the globe.

Q&A

Q: Sometimes you have to take a humanities approach to knowledge. Maybe you need to use some of the old systems investigations tools. Maybe link Twitter to systems thinking.

A: Good point. But caution: I haven’t seen much research on how the next generation is doing research and is learning. We don’t have the good sociology yet to see what difference that makes. Does it fragment their attention? Or is this a good thing?

Q: It’d be useful to know who borrows what books, etc., but there are restrictions in the US. How about in Great Britain?

A: If anything, it’s more restrictive in the UK. In the UK a library can’t even archive a web site without permission.

A: The example I gave of real time tracking was of articles, not books. Maybe someone will track usage at Google Books.

Q: Can you talk about what happens to the experience of interpreting a text when you have so much computer-generated data?

A: In the best cases, it’s both/and. E.g., you can’t read all the 19th century digitized newspapers, but you can compute against it. But you still need to approach it with a thought process about how to interpret it. You need both sets of skills.

A: If someone comes along and says it’s all statistics, the reply is that no one wants to read pure stats. They want to read stats put into words.

Q: There’s a science reader that lets you keep track of which papers are being read.

A: E.g., Mendeley. But it’s a self-selected group who use these tools.

Q: In the physical sciences, the more info that’s out there, the harder it is to tell what’s important.

A: One way to address it is to think about it as a cycle: as a field gets overwhelmed with info, you get tools to concentrate the information. But if you only look at a small piece of knowledge, what are you losing? In some areas, e.g., areas within physics, everyone knows everyone else and what everyone else is doing. Earth sciences is a much broader community.

[Interesting talk. It’s orthogonal to my own interests in how knowledge is becoming something that “lives” at the network level, and is thus being redefined. It’s interesting to me to see how this looks when sliced through at a different angle.]

June 6, 2012

I learned yesterday from Robin Wendler (who worked mightily on the project) that Harvard’s library catalog dataset of 12.3M records has been bulk downloaded a thousand times, excluding the Web spiderings. That seems like an awful lot to me, and makes me happy.

The library catalog dataset comprises bibliographic records of almost all of Harvard Library’s gigantic collection. It’s available under a CC0 public domain dedication for bulk download, and can be accessed through an API via the DPLA’s prototype platform. More info here.

June 4, 2012

Aaron Shaw has a very interesting post on what sure looks like contradictory instructions from the White House about whether we’re free to remix photos that have been released under a maximally permissive U.S. Government license. Aaron checked in with a Berkman mailing list where two theories are floated: It’s due to a PR reflex, or it’s an attempt to impose a contractual limitation on the work. There have been lots of other attempts to impose such limitations on reuse, so that “august” works don’t end up being repurposed by hate groups and pornographers; I don’t know if such limitations have any legal bite.

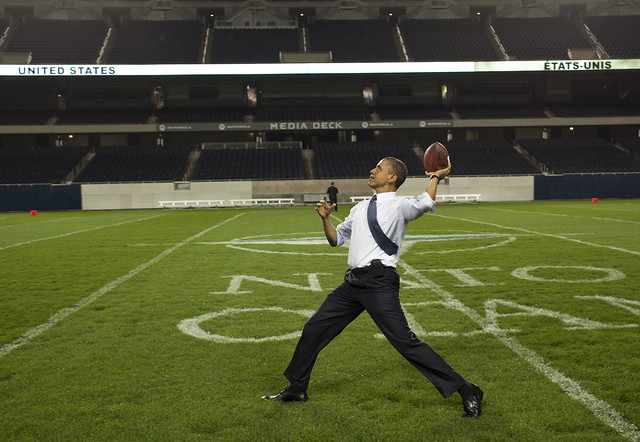

Dan Jones places himself clearly on the side of remixing. Here’s the White House original:

And here’s Dan’s gentle remix:

Hey look out Mr. President! A supposedly unauthorized derivative work is headed right toward you!! (alert: @aaronshaw) twitter.com/blanket/status…

— Daniel Dennis Jones (@blanket) June 4, 2012

Bring it, Holder! :)

June 2, 2012

Is it just me, or are we in a period when new distribution models are burgeoning? For example:

1. Kickstarter, of course, but not just for startups trying to kickstart their business. For example, Amanda Palmer joined the Louis CK club a couple of days ago by raising more than a million bucks there for her new album. (She got my $5 :) As AFP has explained, she is able to get this type of support from her fans because she treats her fans honestly, frankly, with respect, and most of all, with trust.

2. At VODO, you can get your indie movie distributed via bittorrent. If it starts taking off, VODO may feature it. VODO also works with sponsors to support you. From my point of view as a user, I torrented “E11,” a movie about rock climbing, for free, or I could have paid $5 to stream it for 10 days with the ability to share the deal with two other people. VODO may be thinking that bittorrenting is scary enough to many people that they’ll prefer to get it the easy way by paying $5. VODO tells you where your money is going (70% goes to the artist), and treats us with respect and trust.

3. I love Humble Bundle as a way of distributing indie games. Periodically the site offers a bundled set of five games for as much as you want to pay. When you check out, you’re given sliders so you can divvy up the amount as you want among the game developers, including sending some or all to two designated charities. If you pay more than the average (currently $7.82), you get a sixth game. Each Bundle is available for two weeks. They’ve sold 331,000 bundles in the past three days, which Mr. Calculator says comes to $2,588,420. The games are all un-copy-protected and run on PCs and Macs. Buying a Humble Bundle is a great experience. You’re treated with respect. You are trusted. You have an opportunity to do some good by buying these games. And that’s very cool, since usually sites trying to sell you stuff act as if buying that stuff is the most important thing in the world.

4. I’m hardly the first to notice that Steam has what may be the best distribution system around for mass market entertainment. They’re getting users to pay for $60 games that they otherwise might have pirated by making it so easy to buy them, and by seeming to be on the customer’s side. You buy your PC game at their site, download it from them, and start it up from there. They frequently run crazy sales on popular games for a couple of days, and the game makers report that there is enough price elasticity that they make out well. If I were Valve (the owners of Steam), I’d be branching out into the delivery of mainstream movies.
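For anyone who wants to check Mr. Calculator on the Humble Bundle figure in item 3, here is a minimal sketch in Python. It assumes the total is simply bundles sold times the average price quoted at that moment; the function name is made up for illustration, and since the average drifts as people buy, the result is only a rough estimate, not a figure from Humble Bundle itself.

```python
from decimal import Decimal


def estimated_revenue(bundles_sold: int, average_price: str) -> Decimal:
    """Rough take: number of bundles times the average price paid.

    Hypothetical helper; assumes the quoted average applies to every sale.
    Decimal avoids floating-point rounding on the cents.
    """
    return Decimal(bundles_sold) * Decimal(average_price)


# Figures quoted in the post: 331,000 bundles at a $7.82 average.
total = estimated_revenue(331_000, "7.82")
print(f"${total:,.2f}")
```

The price goes in as a string so that 7.82 is represented exactly as dollars and cents.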

There’s of course much much more going on. But that’s my point: We seem to be figuring out how to manage digital distribution in new and successful ways. The common threads seem to be: Treat your customers with respect. Trust them. Make it easy for them to do what they want to do with the content. Have a sense of perspective about what you’re doing. Let the artists and the fans communicate. Be on your customers’ side.

Put them all together and what do you have? Treat us like people who care about the works we’re buying, the artists who made them, about one another, and about the world beyond the sale.